Imagine interacting with an AI so intuitive that it feels like conversing with a top expert in your field, whether you’re discussing financial markets, the latest tech innovations, or intricate legal matters. This is not a distant future scenario; it’s a rapidly approaching reality, driven in part by advances in prompt engineering for language models. As AI weaves its way into our professional lives, understanding how to communicate with these systems effectively becomes crucial.

This article delves into the art and science of prompt engineering, exploring various techniques from zero-shot to more sophisticated methods. Whether you’re a developer, a business leader, or just AI-curious, mastering these techniques can enhance how you leverage AI tools, making them more powerful and aligned with your needs.

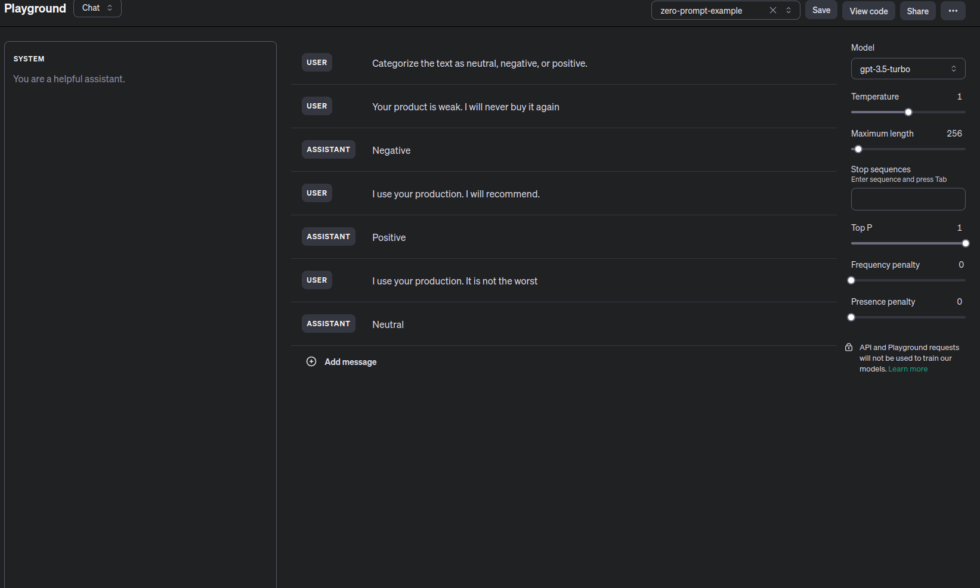

Zero-Shot Prompting: Immediate understanding with no examples needed

In AI, Zero-Shot Prompting means asking a model to perform a task from instructions alone, without including any examples of that task in the prompt. The model relies entirely on what it learned during pre-training. This ability is crucial for building flexible AI systems that can handle unexpected queries.

For example, consider a content moderation team that must classify trending slang on social media as offensive or harmless. A model with strong zero-shot capabilities could classify new slang immediately, even though it was never shown labeled examples of those particular terms.

Advantages:

- Flexibility: Handles various tasks without retraining.

- Speed: Quick deployment for new types of queries and problems.

Limitations:

- Accuracy Variance: It may be less precise than models fine-tuned for the task or prompts that include examples.

- Dependence on General Knowledge: Performance depends on the breadth and quality of the model’s pre-training data.

Real-World Application

Imagine a marketing firm that uses an AI tool to monitor brand sentiment across social media. Without needing specific examples of every potential phrase or sentiment trend, the AI can leverage its general knowledge to classify and report on sentiment, aiding in rapid response strategies and marketing adjustments.


Example:

We did not supply the model with any example texts or corresponding classifications in the prompt. The language model already understands the concept of “sentiment” from its pre-training, which is what makes zero-shot classification possible.

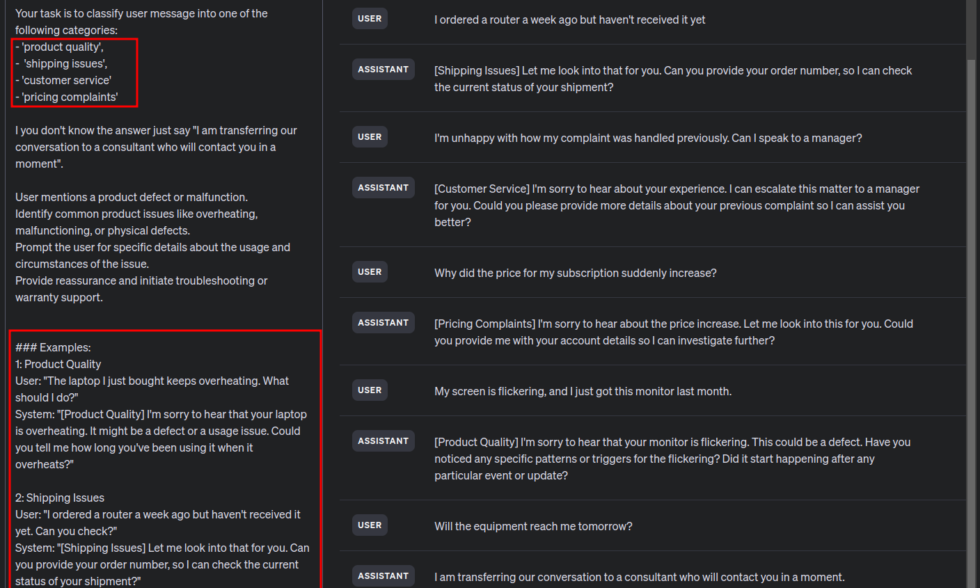
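As a rough illustration, here is a minimal Python sketch of a zero-shot prompt for the brand-sentiment scenario. The function name `build_zero_shot_prompt` and the three sentiment labels are hypothetical choices; the resulting string would be sent to whatever model API you use, which is not shown here.

```python
def build_zero_shot_prompt(post: str) -> str:
    """Zero-shot: the prompt holds only an instruction and the input, no labeled examples."""
    return (
        "Classify the sentiment of the following social media post "
        "as positive, neutral, or negative.\n\n"
        f"Post: {post}\n"
        "Sentiment:"
    )


prompt = build_zero_shot_prompt("Honestly the new update is fire, loving it")
print(prompt)
```

Ending the prompt with `Sentiment:` nudges the model to answer with a single label, which keeps the output easy to parse.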

Few-Shot Prompting: Tailoring AI Response with Minimal Examples

Few-shot prompting is a technique where the prompt includes a few examples of the task before the language model is asked to perform it. This method helps the model better understand the desired output format or the context of the query. The examples act as a guide, making the AI’s responses more accurate and contextually appropriate without retraining or large datasets.

Advantages:

- Improved Accuracy: By seeing examples of the task at hand, the model can generate more accurate responses tailored to the specific requirements of the scenario.

- Efficiency: Few-shot prompting allows for rapid adaptation to new tasks or changes in context with minimal input, avoiding the time and cost of retraining.

- Scalability: Solutions across various domains can be scaled more easily by adapting the few-shot examples to new content areas without overhauling the entire system.

Limitations:

- Quality of Examples: The effectiveness of Few-Shot Prompting heavily relies on the quality and relevance of the provided examples. Poor examples can lead to misguided responses.

- Limited Scope: While effective in tasks with well-defined output structures, this method might struggle with highly complex or novel scenarios not well-represented by the examples.

Real-World Application: Text Classification in Customer Feedback Analysis

Consider an e-commerce company that uses an AI tool to classify customer reviews into categories such as ‘product quality’, ‘shipping issues’, ‘customer service’, and ‘pricing complaints’. To improve the AI’s accuracy in recognizing and categorizing new types of feedback, the team employs Few-Shot Prompting. They include in the prompt a small set of clearly annotated example reviews for each category. These examples show the model how customers express satisfaction or frustration in each area, without any retraining.

With these few examples, the AI learns to filter and classify vast amounts of incoming customer feedback more efficiently. This speeds up the response process and helps the company quickly identify common issues and trends, potentially improving customer satisfaction and business operations.

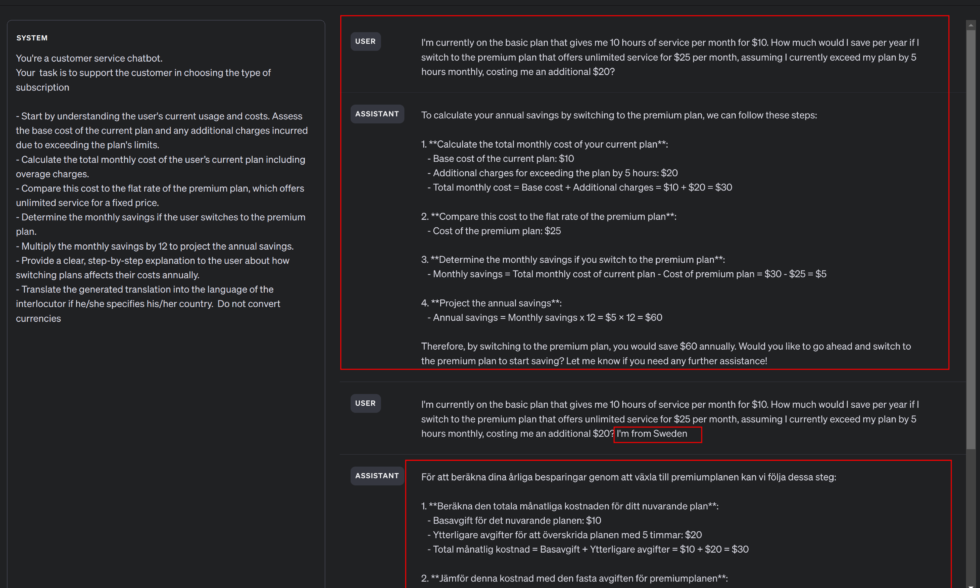
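The customer feedback setup could look roughly like the following Python sketch. The example reviews and the helper `build_few_shot_prompt` are hypothetical; in practice the examples would come from the team’s own annotated data.

```python
# Hypothetical annotated reviews, one per category.
EXAMPLES = [
    ("The fabric started tearing after two washes.", "product quality"),
    ("My package arrived nine days late.", "shipping issues"),
    ("The support agent never replied to my email.", "customer service"),
    ("Way too expensive for what you get.", "pricing complaints"),
]


def build_few_shot_prompt(examples, review: str) -> str:
    """Few-shot: list the labeled examples, then leave the new review's label blank."""
    labels = sorted({label for _, label in examples})
    lines = [
        "Classify each customer review into one of these categories: "
        + ", ".join(labels) + ".",
        "",
    ]
    for text, label in examples:
        lines += [f"Review: {text}", f"Category: {label}", ""]
    lines += [f"Review: {review}", "Category:"]
    return "\n".join(lines)


prompt = build_few_shot_prompt(EXAMPLES, "The charger stopped working after a week.")
print(prompt)
```

Keeping every example in the same `Review:` / `Category:` layout matters: the model tends to copy the format it sees, so consistent examples yield consistent, parseable answers.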

Chain-of-Thought Prompting

This technique guides a language model to articulate its reasoning process step-by-step. It’s particularly useful for tasks that require logical deduction, problem-solving, or complex decision-making. By explicitly prompting the model to “think out loud,” we can trace how it arrives at its answers, which can improve accuracy and makes the answers easier to verify.

Advantages:

- Improved problem solving: Reaches accurate conclusions by breaking problems into steps.

- Transparency: Users see the reasoning behind answers.

- Educational Value: Helps users learn problem-solving approaches.

Limitations:

- Quality of training: Depends on diverse, high-quality training data. If the training data does not adequately cover the range of topics or the types of reasoning required, the model may generate chains of thought that sound plausible but are incorrect.

- Complexity and computation costs: Step-by-step answers are longer, so they take more tokens and time to generate, which could slow real-time applications.

- Complexity in prompt design: Requires careful crafting to avoid misguiding the AI.

- Inconsistency in reasoning: Even with the same input, CoT prompting might generate different reasoning paths, sometimes leading to varying conclusions. This inconsistency can be confusing to users who are expecting deterministic and reliable AI system outputs.

- Vulnerability to cascading errors: Errors in early reasoning steps can cascade, leading to incorrect conclusions. Since each step builds on the previous one, an error at any point in the chain can compromise the entire output.

Real-world Application Example: Customer Service Chatbot

Imagine a customer service scenario where a user needs help deciding whether a subscription plan is right for them based on their usage patterns.
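A chain-of-thought prompt for this chatbot might be assembled as in the Python sketch below. The plan names, prices, and data quotas are invented for illustration, and the numbered steps are one possible way to elicit step-by-step reasoning. The helper `cheapest_covering_plan` is a hypothetical deterministic check that computes the expected answer independently, which helps catch the cascading errors described above.

```python
# Hypothetical plans: name -> (monthly price in USD, included data in GB).
PLANS = {"Basic": (9.99, 10), "Standard": (19.99, 50), "Premium": (29.99, 200)}


def build_cot_prompt(monthly_usage_gb: float, plans: dict) -> str:
    """Ask the model to reason through the plan choice one step at a time."""
    plan_lines = "\n".join(
        f"- {name}: ${price:.2f}/month, {quota} GB included"
        for name, (price, quota) in plans.items()
    )
    return (
        "You are a customer service assistant helping a user choose a subscription plan.\n"
        f"The user's average monthly usage is {monthly_usage_gb} GB.\n"
        f"Available plans:\n{plan_lines}\n\n"
        "Think step by step before answering:\n"
        "1. Compare the user's usage with each plan's included data.\n"
        "2. Rule out plans that would not cover that usage.\n"
        "3. Compare the prices of the remaining plans.\n"
        "4. Recommend one plan and summarize your reasoning."
    )


def cheapest_covering_plan(monthly_usage_gb: float, plans: dict):
    """Deterministic reference answer for spot-checking the model's conclusion."""
    covering = [(price, name) for name, (price, quota) in plans.items()
                if quota >= monthly_usage_gb]
    return min(covering)[1] if covering else None


prompt = build_cot_prompt(35, PLANS)
print(prompt)
print(cheapest_covering_plan(35, PLANS))  # Standard
```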

Reflection Prompting

This approach encourages a language model to review its previous outputs, analyze the reasoning or decisions made, and explore how these might be improved in future interactions. This continuous loop of action, reflection, and adaptation is crucial for developing models that can evolve and respond effectively to feedback.
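The action, reflection, and adaptation loop can be sketched in Python as follows. `generate` and `critique` are hypothetical stand-ins for model calls (the critic could be the same model prompted to review its answer, or human feedback), and the stub functions exist only so the sketch runs. In this sketch the improvement lives in the prompt: each revision sees the previous answer and the feedback, while the underlying model stays unchanged.

```python
from typing import Callable, Optional


def reflect_and_revise(
    task: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], Optional[str]],
    max_rounds: int = 3,
) -> str:
    """Answer, then alternate critique and revision until no feedback remains."""
    answer = generate(task)
    for _ in range(max_rounds):
        feedback = critique(task, answer)
        if feedback is None:  # the critic is satisfied
            break
        answer = generate(
            f"Task: {task}\n"
            f"Your previous answer: {answer}\n"
            f"Feedback: {feedback}\n"
            "Reflect on what the previous answer got wrong, then write an improved answer."
        )
    return answer


def fake_generate(prompt: str) -> str:
    # Hypothetical stand-in for a model call: improves once feedback is present.
    if "Feedback:" in prompt:
        return "We apologize for the missing item; a replacement ships today."
    return "Your order was shipped."


def fake_critique(task: str, answer: str) -> Optional[str]:
    # Hypothetical stand-in critic: asks for an apology until one appears.
    return None if "apologize" in answer else "Acknowledge the problem and apologize."


result = reflect_and_revise(
    "Reply to a customer whose order arrived incomplete.", fake_generate, fake_critique
)
print(result)
```

The `max_rounds` cap is a design choice worth keeping in real systems: it bounds the extra cost of reflection and stops the loop if the critic never becomes satisfied.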

Advantages

- Enhanced Learning: Instead of treating its first answer as final, the model revisits it and adjusts based on feedback or new information. Reflection prompting facilitates this by asking the model to critique and revise its own responses.

- Increased Accuracy: By continually refining their approaches, models can reduce errors and increase the accuracy of their outputs, especially in complex domains where context and nuance are critical.

- Greater user trust: Users may place more trust in models that visibly correct and improve their answers. This is particularly important in fields where decision-making must be transparent and reliable.

Limitations

- Accurate Feedback: Improvements depend on the quality of the feedback. The model can only improve if the feedback is accurate, relevant, and unbiased.

- Increased complexity and overhead: Implementing reflection prompts effectively requires additional system complexity. Beyond generating responses, the system needs mechanisms to gather, process, and integrate feedback.

- Potential for overfitting: If a model continually adjusts its responses based on a limited set of feedback or from a non-diverse user base, there is a risk of overfitting to specific types of feedback or biases.

- Difficulty quantifying improvements: Measuring the effectiveness of reflections and subsequent response adjustments can be challenging. Depending on the context and nature of the feedback, the improvements might be subtle or subjective.

- User privacy and data security concerns: Effective reflection often involves storing and analyzing user interactions, which can raise concerns about user privacy and data security.

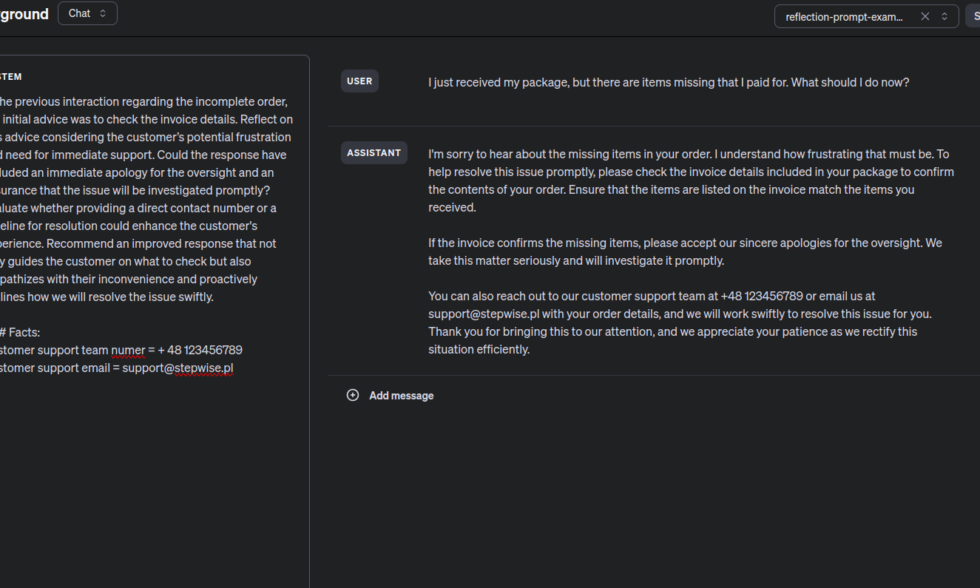

Example of Reflection Prompting

Here’s a system prompt designed for a language model that utilizes the Reflection prompting technique, specifically tailored for a customer service scenario where the initial response was about an incomplete order. In the example, for simplicity’s sake, I assume that this is a continuation of the conversation.
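A prompt of this kind could be wired into a conversation as in the following Python sketch. It assumes the chat-style list of `{"role": ..., "content": ...}` messages used by many chat-completion APIs; the system prompt wording, the helper `build_messages`, and the conversation turns are hypothetical.

```python
# Hypothetical system prompt asking the model to review its previous reply first.
REFLECTION_SYSTEM_PROMPT = (
    "You are a customer service assistant for an online store.\n"
    "Before writing each new reply, review your previous reply in this conversation:\n"
    "1. Did it fully address the customer's problem, including which items are missing?\n"
    "2. Was any information vague or incorrect?\n"
    "3. Was the tone empathetic and professional?\n"
    "If you find a shortcoming, briefly acknowledge it, then give a corrected and complete answer."
)


def build_messages(history: list, new_user_message: str) -> list:
    """Prepend the system prompt and append the latest customer message."""
    return [
        {"role": "system", "content": REFLECTION_SYSTEM_PROMPT},
        *history,
        {"role": "user", "content": new_user_message},
    ]


# Hypothetical earlier turns: the first reply missed the actual problem.
history = [
    {"role": "user", "content": "My order arrived, but the phone case I ordered is missing."},
    {"role": "assistant", "content": "Thanks for reaching out! Your order shows as delivered."},
]
messages = build_messages(history, "That doesn't help. What happens with the missing case?")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```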

Tree of Thoughts Prompting

This technique leverages the metaphor of a “tree” to structure the decision-making process. Each “branch” of the tree represents a different line of reasoning or a possible answer to a part of the question. This enhances the depth of the analysis and allows the model to present multiple perspectives or solutions, ultimately selecting the most robust or logical conclusion.
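The branching idea can be sketched as a search. In a full ToT setup the language model itself proposes the next thoughts and rates them; in the Python sketch below both roles are replaced by deterministic toy functions (reaching the number 24 with simple operations) so that the search skeleton runs without any API. The breadth-first expansion with pruning shown here is one common way to organize the tree, not the only one.

```python
from typing import Callable, List, Tuple

# A "thought" is a partial solution: (current value, operations applied so far).
State = Tuple[int, Tuple[str, ...]]

TARGET = 24  # toy goal standing in for "the best answer to the question"


def propose(state: State) -> List[State]:
    """Branch: each child applies one operation (stands in for model-proposed thoughts)."""
    value, steps = state
    return [
        (value + 3, steps + ("+3",)),
        (value * 2, steps + ("*2",)),
        (value * 3, steps + ("*3",)),
    ]


def evaluate(state: State) -> float:
    """Score a branch: closer to TARGET is better (stands in for model self-evaluation)."""
    return -abs(TARGET - state[0])


def tree_of_thoughts(
    root: State,
    propose_fn: Callable[[State], List[State]],
    evaluate_fn: Callable[[State], float],
    beam_width: int,
    depth: int,
) -> State:
    """Breadth-first search that keeps only the beam_width best branches per level."""
    frontier = [root]
    for _ in range(depth):
        candidates = [child for state in frontier for child in propose_fn(state)]
        if not candidates:
            break
        candidates.sort(key=evaluate_fn, reverse=True)
        frontier = candidates[:beam_width]
    return max(frontier, key=evaluate_fn)


best = tree_of_thoughts((1, ()), propose, evaluate, beam_width=2, depth=3)
print(best)  # (24, ('+3', '*3', '*2'))
```

Pruning is what keeps the tree manageable: with three branches per step and depth 3, exploring everything would mean 3 + 9 + 27 = 39 evaluations, whereas keeping the best two branches per level needs only 3 + 6 + 6 = 15.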

Advantages

- Comprehensive analysis: Enables the model to explore a variety of potential answers or solutions, ensuring that the response is well-rounded and considers multiple viewpoints.

- Enhanced problem solving: It is particularly useful in complex scenarios where simple linear reasoning might miss critical nuances. ToT allows for a deeper exploration of the problem space.

- Improved decision-making: By evaluating different outcomes and reasoning paths, the model can make more informed decisions, which is crucial in fields requiring high levels of accuracy and reliability.

Limitations

- Increased computational complexity: ToT requires the model to generate multiple reasoning paths simultaneously, which can significantly increase the computational complexity and resource demands.

- Difficulty in managing and synthesizing branches: Managing multiple branches of thought and synthesizing them into a coherent conclusion can be challenging for both the model and the developers designing these systems.

- Quality of branch exploration: The effectiveness of ToT depends heavily on the quality of each branch’s exploration. If some branches are not thoroughly explored or are based on inadequate or biased information, the overall decision quality can suffer.

- Scalability issues: As the complexity of the problem increases, the number of potential branches can grow exponentially, making it difficult to scale the ToT technique efficiently.

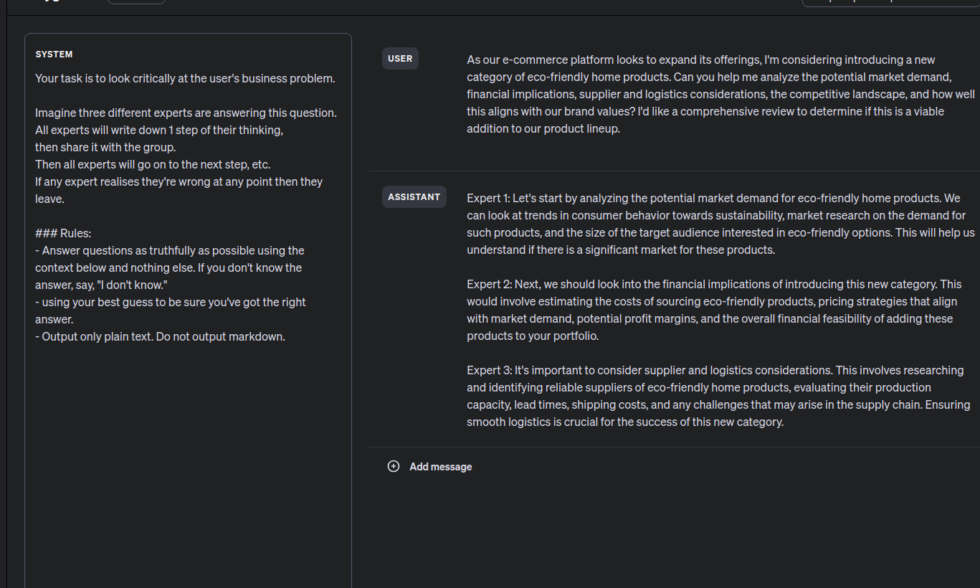

Application Example: Introducing a New Product Category in E-commerce

An online retailer is considering expanding its product offerings by introducing a new category of eco-friendly home products. Using the Tree of Thoughts prompt technique, the company’s decision-making process can be structured to evaluate this strategic move comprehensively.

In the above example, I used the prompt proposed by Dave Hulbert (2023). This method utilizes the foundational idea of ToT frameworks to develop a straightforward prompting technique that guides the LLM to assess intermediate thoughts within a single prompt.
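For readers who want to experiment, the Python sketch below builds a single-prompt ToT instruction in the spirit of Hulbert’s “several experts” approach for the eco-friendly product decision. The wording is an approximation written for this illustration, not a verbatim copy of his prompt, and the `n_experts` parameter and the listed decision factors are assumptions.

```python
def build_tot_prompt(question: str, n_experts: int = 3) -> str:
    """Single-prompt ToT: simulated experts explore branches and drop flawed ones."""
    return (
        f"Imagine {n_experts} different experts are answering this question. "
        "Each expert writes down one step of their thinking and shares it with the group. "
        "Then all experts move on to the next step, and so on. "
        "If any expert realizes their reasoning is flawed at any point, they drop out. "
        "At the end, summarize the conclusion the remaining experts agree on.\n\n"
        f"Question: {question}"
    )


question = (
    "Should our online store introduce a new category of eco-friendly home products? "
    "Consider customer demand, supplier availability, pricing and margins, and brand fit."
)
tot_prompt = build_tot_prompt(question)
print(tot_prompt)
```

Each simulated expert acts as one branch, and dropping experts whose reasoning fails plays the role of pruning, all inside a single model call.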

Links

- https://www.promptingguide.ai/

- Prompting “magic words” that improve language models’ reasoning ability: https://github.com/holarissun/PanelGPT