Text analysis is a smart Machine Learning technique that resolves many issues related to large sets of text data. It can be applied in multiple fields of science and business with local resources or cloud services. I compared two technologies, Python and Big Query, to check which solution is better. The results of my experiment may come as a surprise to you!

What is text analysis?

Text analysis is an indispensable element of Data Science and Machine Learning to easily and quickly analyse large sets of unstructured text data. In this way, decision makers can get the data and information they need exactly at any level of organisation. Meeting these needs should be the objective of any programmer who creates and develops business software.

What content can be analysed?

Any electronic texts can be processed: press releases, comments in internet forums, e-mails, social media posts etc.

Text analysis has been gaining in importance for several years. The popularisation of Machine Learning solutions and access to the constantly growing sources of data on the Internet are promoting text mining tools and technologies.

Text analysis using Machine Learning has a wide range of applications. It is commonly used in business as it is beneficial regardless of the branch. Text analysis facilitates:

- sentiment analysis to determine polarisation, e.g. customer attitudes toward brands, or a given candidate or party in upcoming elections,

- topic analysis to help algorithms automatically assign categories to texts based on the detection of specific phrases, and to indicate what the text is about,

- keyword extraction to detect the number of articles that deal with a given topic and contain the largest number of particular key phrases, or texts that contain the name of a company (social listening),

- early detection of issues on the basis of specific words, phrases and expressions in a text (e.g. detection of difficult customers based on comments and emails).

Preparing data for analysis

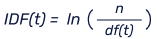

Nearly every text analysis requires some content preparation, e.g. deleting unnecessary signs or words, converting text into lowercase, lemmatisation or stemming. Machine Learning algorithms very often need text vectorisation. One popular method of vectorisation is TFIDF (term frequency-inverse document frequency, https://nlp.stanford.edu/IR-book/html/htmledition/tf-idf-weighting-1.html ).

TFIDF represents the weight of a particular word from its frequency in a text. This helps in determining the importance and relevance of a given word in a document.

How can you select the best solution for text analysis?

In order to identify the best methods for the implementation of text analysis, a variety of issues can be taken into account. For the needs of this article, the following three factors were considered:

- implementation time,

- implementation convenience,

- computational time.

Before discussing the results, one issue is worth being explained. This assessment is subjective, and each of the above mentioned factors can be optimised, as can programmer skills, which is why the final outcome may change. They should be investigated individually in each project.

When preparing this experiment, it was necessary to make compromises and to not follow additional optimisation methods. In order to maintain a fair comparison, similar conditions were provided for every solution.

I selected the two popular methods of text analysis:

- implementation in Scikit-learn library in Python,

- implementation in SQL using BigQuery.

Text analysis with Scikit-learn in Python

Python is one of the most common programming languages (https://www.tiobe.com/tiobe-index/, https://pypl.github.io/PYPL.html), often recommended as a comfortable and straightforward language for Data Scientists. Python offers a great number of libraries for data analysis and data science, e.g. Scikit-learn – a popular library implementing multiple methods for Machine Learning and data processing, such as TFIDF.

This library easily integrates with other tools, e.g. SciPy and NumPy, and can be found in other Python libraries (e.g. TensorFlow). Scikit-learn was chosen mostly for its popularity and ease of use.

Implementation

For calculating TFIDF the following class was used: sklearn.feature_extraction.text.TfidfVectorizer in the following way:

from sklearn.feature_extraction.text import TfidfVectorizer vectorizer = TfidfVectorizer() vectors = vectorizer.fit_transform(docs) feature_names = vectorizer.get_feature_names_out()

The input of the function is the vector of the analysed text documents. This class enables text pre-processing, but that was not used in this solution. In the TFIDF method in the Scikit-learn library, a fixed value of 1 was added to the values taken into account when determining Idf, changing the original formula:

to:

(where n is the total number of documents in the document set, and df(t) is the number of documents in the document set that contain term t) which prevents the risk of division by 0. This option can be controlled, using the smooth_idf parameter. The vectors returned by the function are normalised by default in such a way that the sum of the squares of the vector elements is 1. This option can be controlled using the norm parameter.

Text analysis in SQL on BigQuery

BigQuery is one of the most frequently used data warehouses in a variety of branches. This is a very intuitive and easily scalable database in the cloud. The BigQuery service is included in the Google Cloud Platform for advanced analytics of huge data resources (Big Data) in real time.

It is possible to analyse data stored directly in BigQuery or use external data sources such as Cloud Storage, Bigtable, Spanner, or Google Sheets, stored in Google Drive. In order to use BigQuery, knowledge of SQL is required, which was used in the method described in this article.

Implementation

The TFIDF method was implemented following information on the website https://stackoverflow.com/questions/47028576/how-can-i-compute-tf-idf-with-sql-bigquery as follows:

WITH words_by_post AS (

SELECT users_ID, REGEXP_EXTRACT_ALL(tweet, r'[a-z]{2,20}\'?[a-z]+') words

, COUNT(*) OVER() docs_n

FROM `your_project.dataset.table`

), words_tf AS (

SELECT users_ID, word, COUNT(*) / ARRAY_LENGTH(ANY_VALUE(words)) tf, ARRAY_LENGTH(ANY_VALUE(words)) words_in_doc

, ANY_VALUE(docs_n) docs_n

FROM words_by_post, UNNEST(words) word

GROUP BY users_ID, word

), docs_idf AS (

SELECT tf.users_ID, word, tf.tf, ARRAY_LENGTH(tfs) docs_with_word, LOG(docs_n/ARRAY_LENGTH(tfs)) idf

FROM (

SELECT word, ARRAY_AGG(STRUCT(tf, users_ID, words_in_doc)) tfs, ANY_VALUE(docs_n) docs_n

FROM words_tf

GROUP BY 1

), UNNEST(tfs) tf

)

SELECT *, tf*idf tfidf

FROM docs_idf

ORDER BY users_ID DESC

This implementation allows for grouping documents from one user.

Which solution for text analysis is better – conditions

Before discussing the results, it is worth mentioning where the data for the test came from. A common source of text data are social media, such as Twitter, which provides information for analysing changes in opinions of users, their behaviour, social networking trends etc. I carried out the analysis from posts regarding COVID-19 published on Twitter (https://www.kaggle.com/code/kerneler/starter-us-covid-tweets-ae6f6bd5-f/data). The dataset consisted of 1,385,459 tweets. In this analysis, clean data was used (deleted punctuation marks, special characters and numbers, uppercase changed to lowercase, deleted stopwords).

When analysing Twitter, we can compare particular tweets or treat a set of tweets of one user as one document. In this comparison, both approaches were used. We wanted to check the time of processing of each. Due to a lack of source information about the users, experimental users were randomly assigned to tweets, maintaining a normal distribution. Users were necessary in this comparison in order to check how text grouping influences the computation time.

Due to a small number of tweets, an additional set of artificially generated messages was used. 50,000 English words were arranged in random tweets (the words were selected randomly, maintaining an exponential distribution) with lengths drawn from a uniform distribution from 1 to 20. Each user was assigned 100 tweets.

The following data sets were included:

| number of tweets | number of users | dataset type | dataset size | |

| tweets_100K | 100,000 | 1,000 | artificial | 11MB |

| tweets_1M | 1,000,000 | 10,000 | artificial | 111MB |

| tweets_10M | 10,000,000 | 100,000 | artificial | 1,1GB |

| tweets_100M | 100,000,000 | 1,000,000 | artificial | 12GB |

| tweets_covid | 1,385,459 | 10,000 | real | 190MB |

The computations in Python were made on a virtual machine on Google Cloud Platform (machine type N2 – CPU platform Intel Cascade Lake, 4xCPU, 16 GB RAM). The test for the data of 100M tweets (12GB) were carried out on a machine with 8xCPU and 64 GB RAM.

Which solution for text analysis is better – results

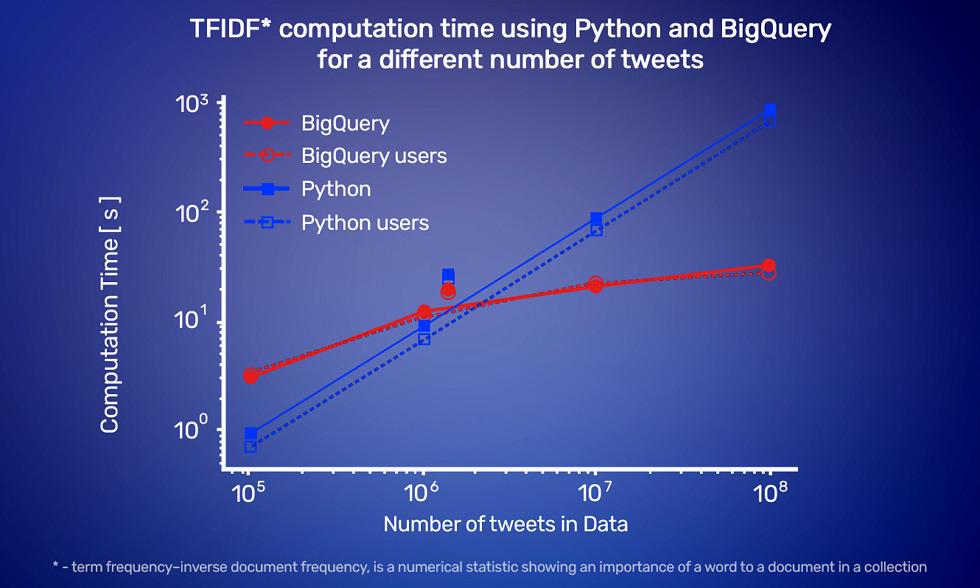

For small datasets (number of tweets below 1 million), the computation time of TFIDF vectors is shorter with the Scikit-learn library in Python. For large datasets, this time is shorter with BQ. Interestingly, the computation time in Python grows linearly with the number of analysed documents, and in BQ much less (approximately logarithmically).

The computation time, including data with tweets grouped in documents, behaves in a similar manner, yet in the implementation in Python, a grouping time of approximately 10% of the computation time not included in the graph must be added to the computation time of the processed data.

The computation time for real data is longer than in the case of artificially generated data, which may result from a different distribution of the length of tweets or a larger number of words used.

The longest part of the computation in BQ is making wordsets by REGEXP method, which constitutes about 80% of the computation time. In fact, when analysing unclean data (with special characters, punctuation marks, numbers), the REGEXP query works for approximately the same time, without forcing an additional stage of data processing.

Scikit-learn library facilitates a quick implementation of the TFIDF method, but the computation time of large datasets is significant. Big Query resolves this issue. Although the implementation may take more time, the results for large datasets can be obtained considerably faster.

The measurements were just for the TFIDF computation (they did not include the time of uploading data to memory). The computations in Scikit-learn required combining the tweets of particular users in documents, which was easily done in BQ by grouping. Using BQ can significantly speed up data analysis.

The comparison of Python and Big Query and specifying clearly which solution is always better for text analysis was not possible. Each of the tested solutions has its pros and cons, and many of these issues can be corrected after optimisation. I do not want to end this article without a valuable reflection and summary. Having completed many projects and this experiment, I can recommend one thing: when analysing a text, it is always worth using the solution which meets customer or user needs and technology developed in a given project best.